Core Software Performance Optimization Principles

Jakob Jenkov

This tutorial lists the core principles I use when optimizing software performance. From time to time, developers run into performance problems in the software they work on. Or you may know ahead of time that the software you are working on has high performance requirements. In those situations it is useful to have these core principles as a guide to where to look for optimization potential.

Core Software Performance Optimization Principles Tutorial Video

If you prefer video, I have a video version of this tutorial: Core Software Performance Optimization Principles Tutorial Video.

Potential Gains of Performance Optimization

The difference between an unoptimized and a fully optimized software system can be a factor of 10 to 100 in throughput, and even a factor of 1,000 if the right conditions are present.

You may not always benefit from such a performance gain, but sometimes you do.

List of Core Software Performance Optimization Principles

Here is what I consider the core performance optimization principles:

- Algorithmic optimization

- Implementation optimization

- Hardware aligned code optimization

- Data to CPU proximity optimization

- Data size optimization

- Minimize action overheads

- Idle time utilization

- Parallelization

- External system interaction

- Measure - don't assume!

I will get into a bit more detail about each of these principles in the following sections.

(Coming soon. Until then, see the video for more details.)
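As a small illustration of the last principle in the list, measuring instead of assuming, here is a minimal sketch that times two ways of doing the same membership checks. The function names and data sizes are hypothetical and chosen only for illustration. The timings will differ from machine to machine, which is exactly why they have to be measured.

```python
import timeit


def contains_list(items, targets):
    """Count how many targets occur in items, using linear scans of a list."""
    return sum(1 for t in targets if t in items)


def contains_set(items, targets):
    """Count how many targets occur in items, using hash lookups in a set."""
    lookup = set(items)
    return sum(1 for t in targets if t in lookup)


# Hypothetical workload: 1,000 items and 1,000 lookups, half of which hit.
items = list(range(1_000))
targets = list(range(0, 2_000, 2))

# Both implementations must agree before their speed is worth comparing.
assert contains_list(items, targets) == contains_set(items, targets)

for fn in (contains_list, contains_set):
    seconds = timeit.timeit(lambda: fn(items, targets), number=5)
    print(f"{fn.__name__}: {seconds:.4f} s for 5 runs")
```

The sketch checks that both versions return the same result first, and only then compares their running time.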

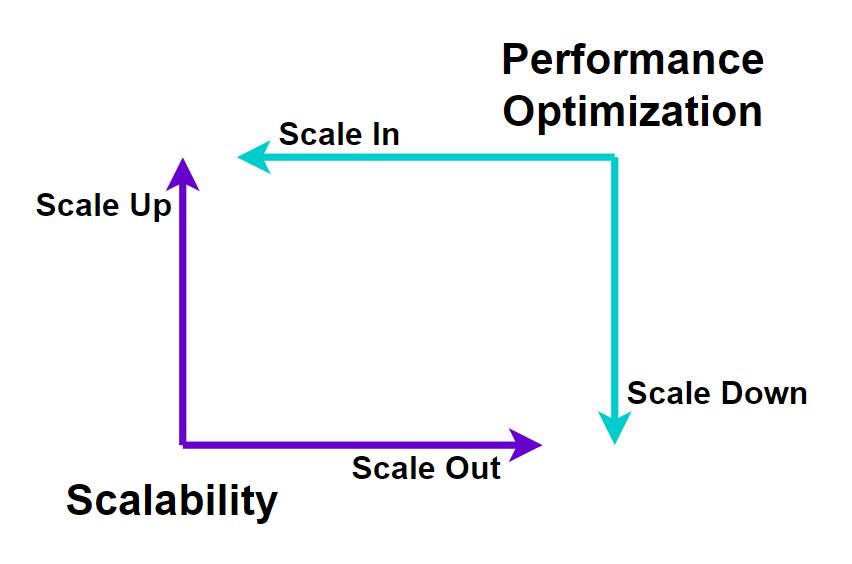

Scalability vs. Performance Optimization

When a system experiences increased load there are typically two approaches to increasing that system's capacity:

- Scale the system vertically (up) or horizontally (out)

- Optimize the performance of the code

Both approaches may require some design changes to the software.

At first, designing for scalability and designing for performance might seem like two similar approaches to solving the same problem. However, they are two very different, almost opposite, approaches to solving the capacity problem:

Designing for scalability enables you to meet capacity needs by scaling up and scaling out. In other words, by adding hardware.

Designing for performance enables you to meet capacity needs while scaling down and scaling in. In other words, by removing hardware.

Put differently, designing for scalability enables you to increase capacity by increasing hardware cost, whereas designing for performance enables you to increase capacity at the same or lower hardware cost.
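The trade-off can be made concrete with a little arithmetic. The sketch below is only an illustration: the load and throughput figures are hypothetical, and it assumes identical servers whose throughput adds up linearly, which real systems rarely achieve exactly.

```python
import math


def servers_needed(target_rps, rps_per_server):
    """Smallest number of identical servers able to carry target_rps."""
    return math.ceil(target_rps / rps_per_server)


# Hypothetical numbers, chosen only for illustration.
target_rps = 50_000     # requests per second the system must handle
baseline_rps = 1_000    # one server running the unoptimized code
optimized_rps = 10_000  # the same server after a 10x code optimization

scale_out_only = servers_needed(target_rps, baseline_rps)
after_optimizing = servers_needed(target_rps, optimized_rps)

print(f"scaling out only:       {scale_out_only} servers")
print(f"after 10x optimization: {after_optimizing} servers")
```

With these made-up numbers, scaling out alone takes 50 servers, while the optimized code meets the same load with 5.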

Scalability or Performance?

It is quite common to design for scalability first, so you can throw hardware at the capacity problem. Only when the hardware needed becomes too expensive do people start thinking about performance optimization, if at all.

I prefer to design for performance first and scalability second, for the following reasons:

First, a performance-optimized application enables you to scale up or out later more cost-effectively. Simply put, if you do end up having to scale up or out, you need less hardware to meet your capacity needs.

Second, being able to meet your capacity needs with less hardware will result in a lower carbon footprint.

Third, only by learning to design for performance will you know exactly how much capacity you can squeeze out of your hardware. Learning the different performance techniques will teach you a lot more about algorithms and hardware, and make you a better developer overall.
